Embracing Pragmatism in Container Security

In the world of container orchestration, securing thousands of Docker containers is no small feat. But with a pragmatic approach and a keen understanding of risk assessment, it’s possible to create a secure environment that keeps pace with the rapid deployment of services.

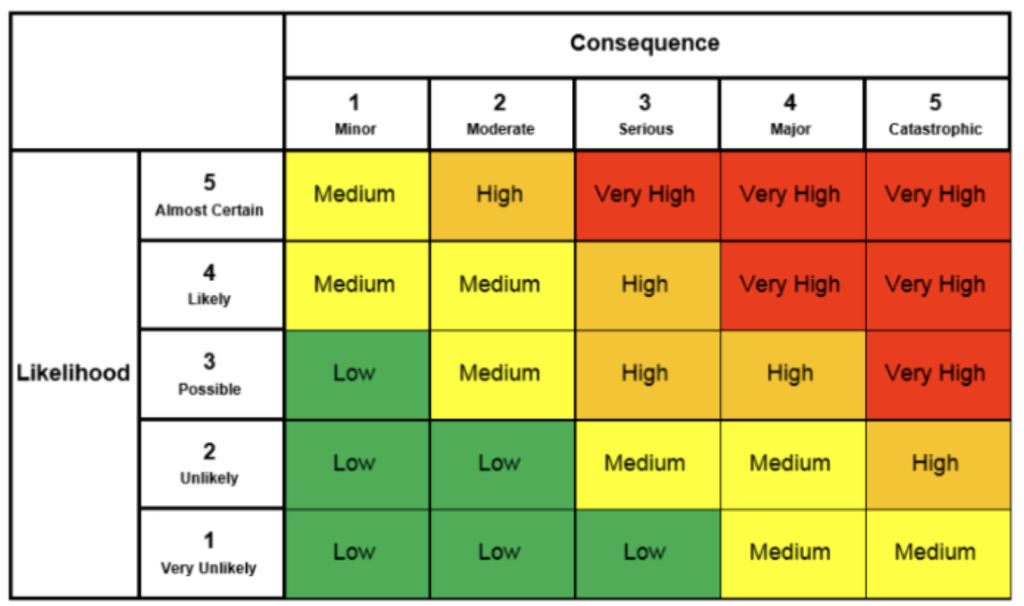

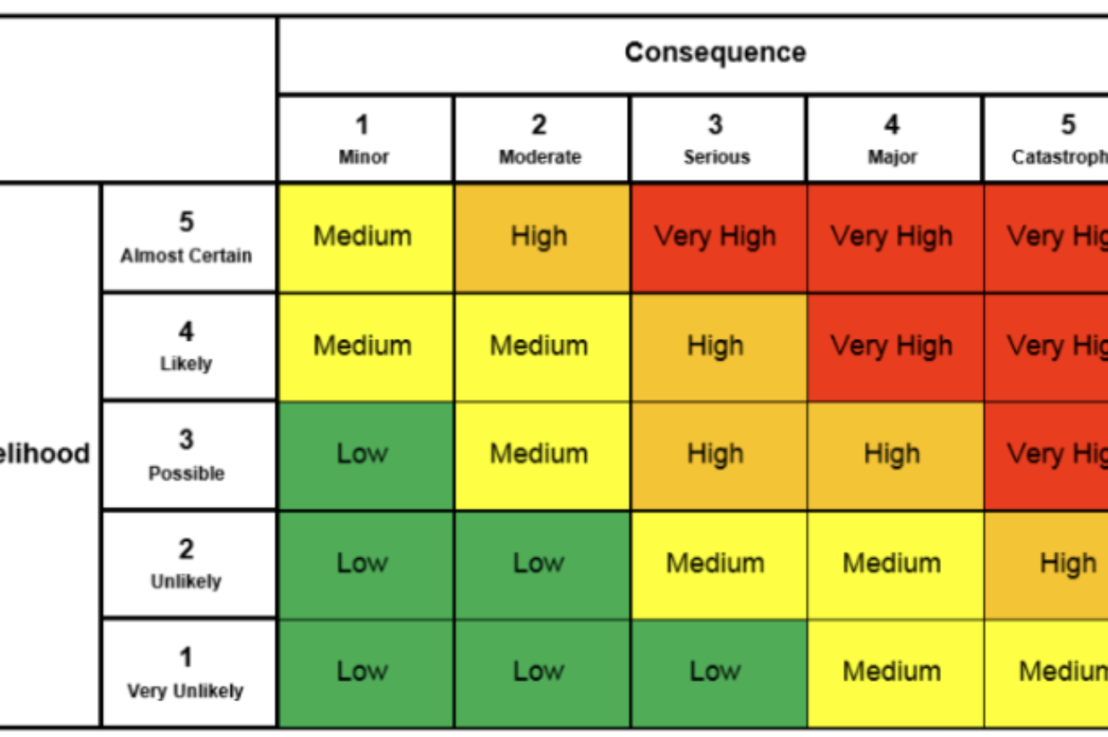

The Risk Matrix: A Tool for Prioritization

At the heart of our security strategy is a Risk Matrix, a critical tool that helps us assess and prioritize vulnerabilities. The matrix classifies potential security threats based on the severity of their consequences and the likelihood of their occurrence. By focusing on Critical and High Common Vulnerabilities and Exposures (CVEs), we use this matrix to identify which issues in our Kubernetes clusters need immediate attention.

Likelihood: The Critical Dimension for SMART Security

To ensure our security measures are Specific, Measurable, Achievable, Relevant, and Time-bound (SMART), we put particular weight on one dimension of the matrix: Likelihood. This dimension helps us pinpoint high-risk items that require swift action, balancing the need for security with the practicalities of our day-to-day operations.
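
As a rough illustration of how severity and likelihood can be combined, here is a minimal Python sketch. The scale labels, the numeric weights, and the `Finding` class are assumptions made for this example only; they are not taken from any particular scanner or standard, and the CVE identifiers are placeholders.

```python
from dataclasses import dataclass

# Ordinal scales. Labels and weights are illustrative assumptions.
SEVERITY = {"low": 1, "medium": 2, "high": 3, "critical": 4}
LIKELIHOOD = {"rare": 1, "possible": 2, "likely": 3}


@dataclass(frozen=True)
class Finding:
    cve_id: str
    severity: str    # severity as reported by the scanner
    likelihood: str  # our own estimate for this environment


def risk_score(finding: Finding) -> int:
    """Severity multiplied by likelihood, i.e. one cell of the risk matrix."""
    return SEVERITY[finding.severity] * LIKELIHOOD[finding.likelihood]


def prioritise(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest risk score."""
    return sorted(findings, key=risk_score, reverse=True)


if __name__ == "__main__":
    backlog = [
        Finding("CVE-EXAMPLE-0001", "critical", "rare"),
        Finding("CVE-EXAMPLE-0002", "high", "likely"),
        Finding("CVE-EXAMPLE-0003", "medium", "possible"),
    ]
    for finding in prioritise(backlog):
        print(finding.cve_id, risk_score(finding))
```

With these assumed weights, a High finding that is likely to be exploited outranks a Critical finding that is rarely reachable, which is the point of adding likelihood to the picture.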

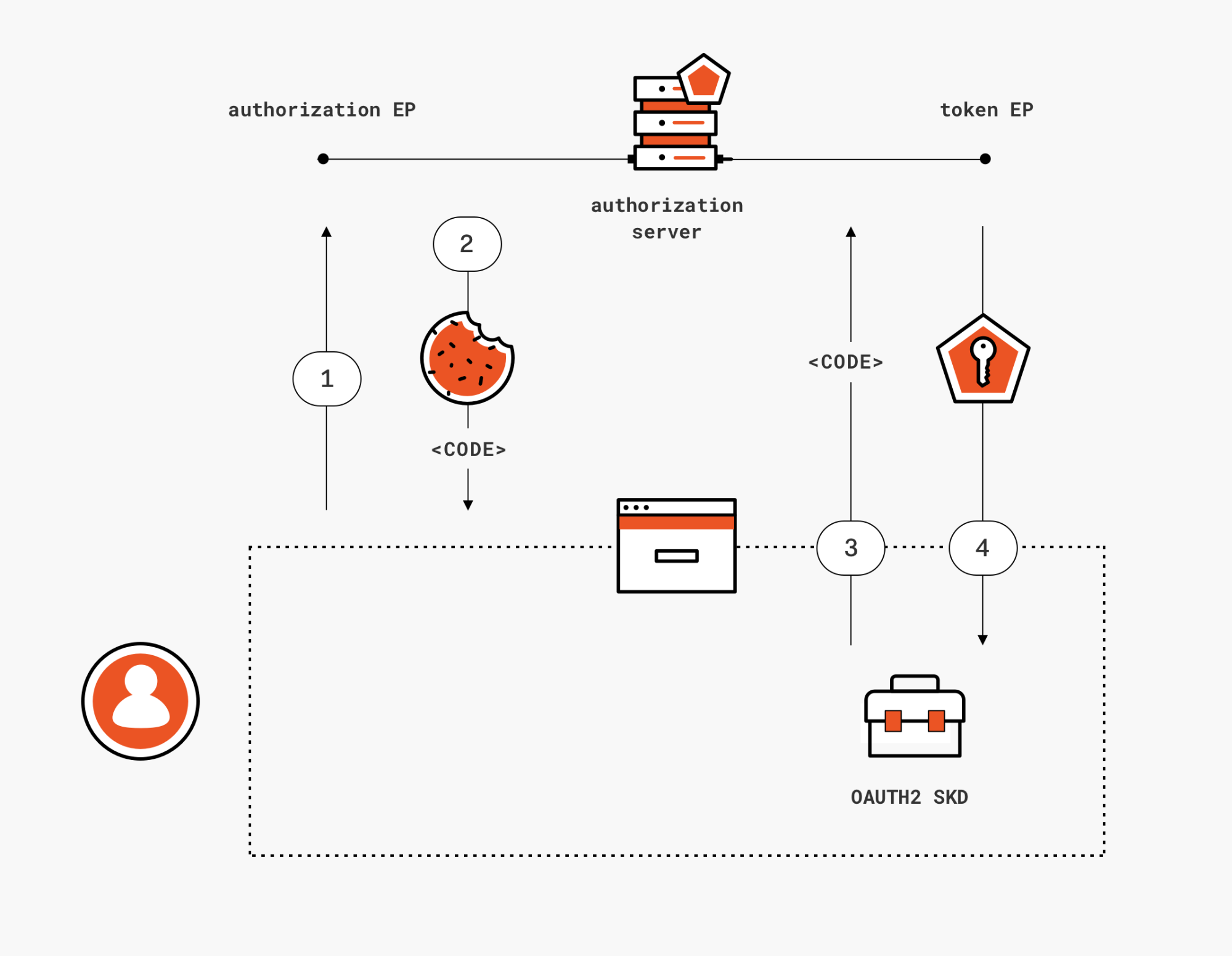

DevSecOps: Tactical Solutions for the Security-Minded

As we journey towards a DevSecOps culture, we often rely on tactical solutions to reinforce security, especially if the organization is not yet mature in TOGAF Security practices. These solutions are about integrating security into every step of the development process, ensuring that security is not an afterthought but a fundamental component of our container management strategy.

Container Base Images

Often, you will find Critical and High CVEs that are outside your control because they come from a third-party base image. Take Cert-Manager and External-DNS as prime examples; they are the backbone of many Kubernetes clusters in the wild. These images rely on Google’s Golang images, which in turn use a base image from jammy-tiny-stack. You see where I am going here? Many third-party images can lead you down a rabbit hole.

Remember, the goal is to MANAGE RISK, not eradicate risk; the latter is futile and leads to impractical security management. Look at ways to mitigate risks by reducing public service ingress footprints or improving North/South and East/West firewall solutions such as Calico Cloud. This allows you to contain security threats if a network segment is breached.

False Positives

For many CVEs, the real-world risk does not match the published severity rating, so always look at the risk and likelihood. The docker-library FAQ (see Sources) explains why:

Though not every CVE is removed from the images, we take CVEs seriously and try to ensure that images contain the most up-to-date packages available within a reasonable time frame. For many of the Official Images, a security analyzer like Docker Scout or Clair might show CVEs, which can happen for a variety of reasons:

- The CVE has not been addressed in that particular image

- Upstream maintainers don’t consider a particular CVE to be a vulnerability that needs to be fixed, and so it won’t be fixed.

  - e.g., CVE-2005-2541 is considered a High severity vulnerability, but in Debian it is considered “intended behavior,” making it a feature, not a bug.

- The OS Security team only has so much available time and has to prioritize some security fixes over others. This could be because the threat is considered low or because the fix is too intrusive to backport to the version in “stable”.

  - e.g., CVE-2017-15804 is considered a High severity vulnerability, but in Debian it is marked as a “Minor issue” in Stretch and no fix is available.

- Vulnerabilities may not have an available patch, and so even though they’ve been identified, there is no current solution.

- The listed CVE is a false positive

  - In order to provide stability, most OS distributions take the fix for a security flaw out of the most recent version of the upstream software package and apply that fix to an older version of the package (known as backporting).

    - e.g., CVE-2020-8169 shows that curl is flawed in versions 7.62.0 through 7.70.0 and so is fixed in 7.71.0. The version that has the fix applied in Debian Buster is 7.64.0-4+deb10u2 (see security-tracker.debian.org and DSA-4881-1).

  - The binary or library is not vulnerable because the vulnerable code is never executed. Security solutions make the assumption that if a dependency has a vulnerability, then the binary or library using the dependency is also vulnerable. This correctly reports vulnerabilities, but this simple approach can also lead to many false positives. It can be improved by using other tools to detect if the vulnerable functions are used. govulncheck is one such tool made for Go-based binaries.

    - e.g., CVE-2023-28642 is a vulnerability in runc less than version 1.1.5 but shows up when scanning the gosu 1.16 binary since runc 1.1.0 is a dependency. Running govulncheck against gosu shows that it does not use any vulnerable runc functions.

  - Is the image affected by the CVE at all? It might not be possible to trigger the vulnerability at all with this image.

  - If the image is not supported by the security scanner, it uses wrong checks to determine whether a fix is included.

    - e.g., For RPM-based OS images, the Red Hat package database is used to map CVEs to package versions. This causes severe mismatches on other RPM-based distros.

    - This also leads to not showing CVEs which actually affect a given image.

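
The backporting case from the list above can be sketched in a few lines of Python. This is only an illustration of why a check against the upstream version range flags a package that the distribution has already patched. The function names are hypothetical, and the ordering key is a deliberate simplification, not the full dpkg version-comparison algorithm (it ignores epochs and `~`, for instance).

```python
import re

# Upstream range from the CVE-2020-8169 example: curl 7.62.0 through 7.70.0.
VULNERABLE_FROM = (7, 62, 0)
VULNERABLE_TO = (7, 70, 0)


def upstream_tuple(package_version: str) -> tuple:
    """Extract the upstream part ('7.64.0' from '7.64.0-4+deb10u2') as ints."""
    upstream = package_version.split("-", 1)[0]
    return tuple(int(part) for part in upstream.split("."))


def naive_is_vulnerable(package_version: str) -> bool:
    """What a scanner concludes if it only looks at the upstream version."""
    return VULNERABLE_FROM <= upstream_tuple(package_version) <= VULNERABLE_TO


def _sort_key(package_version: str) -> list:
    """Simplified ordering key: digit runs compare as ints, other runs as text.

    Assumption: good enough for the example versions, NOT a dpkg replacement.
    """
    key = []
    for token in re.findall(r"\d+|\D+", package_version):
        key.append((0, int(token), "") if token.isdigit() else (1, 0, token))
    return key


def distro_is_patched(installed: str, fixed_in: str) -> bool:
    """True when the installed package is at or beyond the distro's fixed version."""
    return _sort_key(installed) >= _sort_key(fixed_in)


if __name__ == "__main__":
    installed = "7.64.0-4+deb10u2"  # Debian Buster package carrying the backport
    print("naive check flags it:", naive_is_vulnerable(installed))
    print("distro says patched: ", distro_is_patched(installed, "7.64.0-4+deb10u2"))
```

The two checks disagree for the Buster package, and that disagreement is exactly the false positive described above. In practice the fixed version would come from the distribution's security tracker rather than being hard-coded.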

Conclusion

By combining a risk-based approach with practical solutions and an eye toward DevSecOps principles, we’re creating a robust security framework that’s both pragmatic and effective. It’s about understanding the risks, prioritizing them intelligently, and taking decisive action to secure our digital landscape.

The TOGAF® Series Guide focuses on integrating risk and security within an enterprise architecture. It provides guidance for security practitioners and enterprise architects on incorporating security and risk management into the TOGAF® framework. This includes aligning with standards like ISO/IEC 27001 for information security management and ISO 31000 for risk management principles. The guide emphasizes the importance of understanding risk in the context of achieving business objectives and promotes a balanced approach to managing both negative consequences and seizing positive opportunities. It highlights the need for a systematic approach, embedding security early in the system development lifecycle and ensuring continuous risk and security management throughout the enterprise architecture.

Sometimes, security has to be driven from the bottom up. Ideally, it should be driven from the top down, but we are all responsible for security; if you own many platforms and compute runtimes in the cloud, you must ensure you manage risk under your watch. Otherwise, it is only a matter of time before you get pwned, something I have witnessed repeatedly.

The Real World:

1. Secure your containers from the bottom up in your CI/CD pipelines with tools like Snyk

2. Secure your containers from the top down in your cloud infrastructure with tools like Azure Defender – Container Security

3. Look at ways to enforce the above through governance and policies; this means you REDUCE the likelihood of a threat occurring from both sides of the enterprise.

4. Ensure firewall policies are in place to segment your network so that a breach in one area will not fan out into other network segments. This means you must focus initially on North/South traffic (ingress/egress) and then on East/West traffic (traversing your network segments and domains).
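
For the segmentation point, one common starting position in Kubernetes is a default-deny NetworkPolicy per namespace, with explicit allow rules layered on top. The sketch below builds such a manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The policy name and the `payments` namespace are made up for the example, and the policy only has an effect if the cluster's network plugin enforces NetworkPolicy (Calico does).

```python
import json


def default_deny_policy(namespace: str) -> dict:
    """Build a NetworkPolicy that selects every pod and allows no traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        # The name is an arbitrary choice for this example.
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            # An empty podSelector matches all pods in the namespace.
            "podSelector": {},
            # Listing both types with no rules denies ingress and egress.
            "policyTypes": ["Ingress", "Egress"],
        },
    }


if __name__ == "__main__":
    # 'payments' is a hypothetical namespace.
    print(json.dumps(default_deny_policy("payments"), indent=2))
```

Because this also blocks egress, pods lose DNS resolution until an allow rule for it is added, so treat it as a baseline to build on rather than a finished policy.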

There is a plethora of other risk management strategies, from penetration testing and honeypots to SIEM. Ultimately, you can make a difference no matter where in the technology chart you sit.

Principles

The underlying ingredient for establishing a vision for your reorganisation in the TOGAF framework is defining the principles of the enterprise. In my view, protecting customer data is not just a legal obligation; it’s a fundamental aspect of building trust and ensuring the longevity of an enterprise.

Establishing a TOGAF principle to protect customer data during the Vision stage of enterprise architecture development is crucial because it sets the tone for the entire organization’s approach to cybersecurity. It ensures that data protection is not an afterthought but a core driver of the enterprise’s strategic direction, technology choices, and operational processes. With cyber threats evolving rapidly, embedding a principle of customer data protection early on ensures that security measures are integrated throughout the enterprise from the ground up, leading to a more resilient and responsible business.

Sources:

GitHub – docker-library/faq: Frequently Asked Questions
