Hey Tech Wizards!

If you’ve ever wondered how enterprise architecture (EA) can be agile, you’re in for a treat. Let’s dive into the TOGAF® Series Guide on Enabling Enterprise Agility. This guide is not just about making EA more flexible; it’s about integrating agility into the very fabric of enterprise architecture.

First things first, agility in this context is all about being responsive to change, prioritizing value, and being practical. It’s about empowering teams, focusing on customer needs, and continuously improving. This isn’t just theory; it’s about applying these principles to real-life EA.

Agility at Different Levels of Architecture

Source: https://pubs.opengroup.org/togaf-standard/guides/enabling-enterprise-agility/

The guide stresses the importance of Enterprise Architecture in providing a structured yet adaptable framework for change. It’s about understanding and managing complexity, supporting continuous change, and minimizing risks.

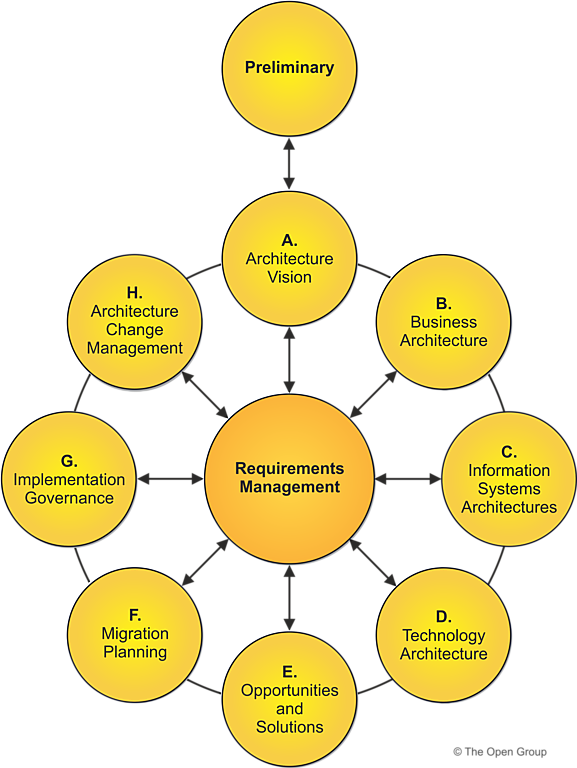

One of the core concepts here is the TOGAF Architecture Development Method (ADM). Contrary to popular belief, the ADM isn’t a rigid, waterfall process. It’s flexible and can be adapted for agility. The ADM doesn’t dictate a sequential process or specific phase durations; it’s a reference model defining what needs to be done to deliver structured and rational solutions.

The guide introduces a model with three levels of detail for partitioning architecture development: Enterprise Strategic Architecture, Segment Architecture, and Capability Architecture. Each level has its specific focus and detail, allowing for more manageable and responsive architecture development.
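To make the three levels concrete, here is a minimal Python sketch of how they might nest. The level names come from the guide; the `Architecture` class, its methods, and the retail example are hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Level(Enum):
    # Level names as used in the guide.
    STRATEGIC = "Enterprise Strategic Architecture"
    SEGMENT = "Segment Architecture"
    CAPABILITY = "Capability Architecture"


@dataclass
class Architecture:
    # Hypothetical container: one architecture plus its more detailed children.
    name: str
    level: Level
    children: List["Architecture"] = field(default_factory=list)

    def add(self, child: "Architecture") -> "Architecture":
        # Attach a more detailed architecture beneath this one and return it.
        self.children.append(child)
        return child

    def walk(self, depth: int = 0):
        # Yield (depth, architecture) pairs, parents before children.
        yield depth, self
        for child in self.children:
            yield from child.walk(depth + 1)


# Illustrative data only: a made-up retail enterprise.
enterprise = Architecture("Retail group", Level.STRATEGIC)
sales = enterprise.add(Architecture("Online sales", Level.SEGMENT))
sales.add(Architecture("Click and collect", Level.CAPABILITY))
sales.add(Architecture("Loyalty pricing", Level.CAPABILITY))

for depth, arch in enterprise.walk():
    print("  " * depth + f"{arch.name} [{arch.level.value}]")
```

Each branch of the tree can be elaborated on its own schedule, which is where the "more manageable and responsive" part comes from.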

Transition Architectures play a crucial role in Agile environments. They are architecturally significant intermediate states, each often bundling several capability increments, that mark out the roadmap toward the desired outcome. They are key to managing risk and understanding the incremental states of delivery, especially when the work is implemented through Agile sprints.
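One way to picture this is a roadmap where each Transition Architecture groups the capability increments that sprints deliver. The sketch below is a rough illustration, not something the guide prescribes: the class names, the `reached` rule, and the sprint numbers are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Increment:
    # Hypothetical capability increment delivered by Agile sprints.
    name: str
    sprint: int  # sprint in which the increment is expected to land


@dataclass(frozen=True)
class TransitionArchitecture:
    # Hypothetical intermediate state made up of several increments.
    name: str
    increments: Tuple[Increment, ...]


def reached(ta: TransitionArchitecture, current_sprint: int) -> bool:
    # Assumed rule: a transition state is reached once all its increments have landed.
    return all(i.sprint <= current_sprint for i in ta.increments)


def status(roadmap: List[TransitionArchitecture], current_sprint: int):
    # Report, in roadmap order, which transition states have been reached.
    return [(ta.name, reached(ta, current_sprint)) for ta in roadmap]


# Illustrative data only.
roadmap = [
    TransitionArchitecture("TA1: basic online ordering", (
        Increment("product catalogue", 1),
        Increment("checkout", 2),
    )),
    TransitionArchitecture("TA2: click and collect", (
        Increment("store stock lookup", 3),
        Increment("pickup scheduling", 5),
    )),
]

for name, done in status(roadmap, current_sprint=3):
    print(f"{name}: {'reached' if done else 'in progress'}")
```

Checking the roadmap at each sprint boundary gives a simple view of which stable states are already in place and which are still at risk.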

The guide also talks about a hierarchy of ADM cycles, emphasizing that ADM phases need not proceed in sequence. This flexibility allows for concurrent work on different segments and capabilities, aligning with Agile principles.
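As a rough illustration of non-sequential, concurrent cycles, the sketch below tracks several ADM cycles that each move between phases independently. The phase letters and names are the standard ADM phases A to H; the `AdmCycle` class and the example cycles are hypothetical.

```python
from enum import Enum


class Phase(Enum):
    # ADM phases A to H (Preliminary and Requirements Management omitted for brevity).
    A = "Architecture Vision"
    B = "Business Architecture"
    C = "Information Systems Architectures"
    D = "Technology Architecture"
    E = "Opportunities and Solutions"
    F = "Migration Planning"
    G = "Implementation Governance"
    H = "Architecture Change Management"


class AdmCycle:
    # Hypothetical tracker for one ADM cycle at some architecture level.
    def __init__(self, name, phase=Phase.A):
        self.name = name
        self.phase = phase
        self.history = [phase]

    def move_to(self, phase):
        # Any phase may follow any other; no ordering is enforced here.
        self.phase = phase
        self.history.append(phase)


# Illustrative cycles at three levels, each progressing at its own pace.
strategic = AdmCycle("Enterprise strategy")
segment = AdmCycle("Online sales segment")
capability = AdmCycle("Click and collect capability")

strategic.move_to(Phase.B)
capability.move_to(Phase.E)  # jumps ahead to explore solution options
capability.move_to(Phase.B)  # loops back as requirements become clearer
segment.move_to(Phase.C)

for cycle in (strategic, segment, capability):
    print(cycle.name, "->", [p.name for p in cycle.history])
```

The point of the sketch is only that nothing forces one cycle to wait for another, or to visit phases in alphabetical order.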

Key takeaways for the tech-savvy:

- Enterprise Architecture and Agility can coexist and complement each other.

- The TOGAF ADM is a flexible framework that supports Agile methodologies.

- Architecture can be developed iteratively, with different levels of detail enabling agility.

- Transition Architectures are essential in managing risk and implementing Agile principles in EA.

- The hierarchy of ADM cycles allows for concurrent development across different architecture levels.

In short, this TOGAF Series Guide is a treasure trove for tech enthusiasts looking to merge EA with Agile principles. It’s about bringing structure and flexibility together, paving the way for a more responsive and value-driven approach to enterprise architecture. Happy architecting!

Sources:

https://pubs.opengroup.org/togaf-standard/guides/enabling-enterprise-agility/
