Introduction to Microservices Architecture (MSA) in the TOGAF® Framework

In the ever-evolving digital landscape, the TOGAF® Standard, developed by The Open Group, offers a comprehensive approach for managing and governing Microservices Architecture (MSA) within an enterprise. This guide is dedicated to understanding MSA within the context of the TOGAF® framework, providing insights into the creation and management of MSA and its alignment with business and IT cultures.

What is Microservices Architecture (MSA)?

MSA is a style of architecture where systems or applications are composed of independent and self-contained services. Unlike a product framework or platform, MSA is a strategy for building large, distributed systems. Each microservice is developed, deployed, and operated independently, focuses on a single business function, and encapsulates all the IT resources it needs. The key characteristics of MSA are service independence, single responsibility, and self-containment.

The Role of MSA in Enterprise Architecture

MSA plays a crucial role in simplifying business operations and enhancing interoperability within the business. This architecture style is especially beneficial in dynamic market environments where companies seek to manage complexity and enhance agility. The adoption of MSA leads to better system availability and scalability, two crucial drivers in modern business environments.

Aligning MSA with TOGAF® Standards

The TOGAF® Standard, with its comprehensive view of enterprise architecture, is well-suited to support MSA. It encompasses all business activities, capabilities, information, technology, and governance of the enterprise. The Preliminary Phase of TOGAF® focuses on determining the architecture’s scope and principles, which are essential for MSA development. This phase addresses the skills, capabilities, and governance required for MSA and ensures alignment with the overall enterprise architecture.

Implementing MSA in an Enterprise

Enterprises adopting MSA should integrate it with their architecture principles, acknowledging the benefits of resilience, scalability, and reliability. Whether adapting a legacy system or launching a new development, the implications for the organization and architecture governance are pivotal. The decision to adopt MSA principles should be consistent with the enterprise’s overall architectural direction.

Practical Examples

TOGAF does not prescribe which tools to use. However, I have found it very useful to couple Domain-Driven Design with Event Storming on a Miro board, where you can get all stakeholders together and nut out the various domains, subdomains, and events.

https://www.eventstorming.com/

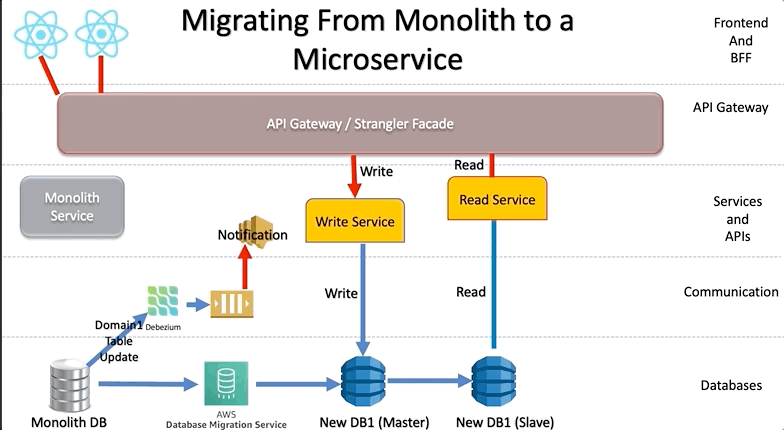
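One way to carry the result of an event storming session into the codebase is to record each sticky note as a small data structure. The sketch below is only an illustration: `DomainEvent`, `group_by_subdomain`, and the sample event names are hypothetical and not part of TOGAF or any event storming tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainEvent:
    name: str        # past-tense fact, as written on the sticky note
    subdomain: str   # subdomain the workshop group assigned it to


def group_by_subdomain(events):
    """Return {subdomain: [event names]}, keeping the order events were captured."""
    grouped = defaultdict(list)
    for event in events:
        grouped[event.subdomain].append(event.name)
    return dict(grouped)


# Hypothetical events from an e-commerce workshop.
board = [
    DomainEvent("OrderPlaced", "Ordering"),
    DomainEvent("PaymentCaptured", "Payments"),
    DomainEvent("OrderShipped", "Ordering"),
]
print(group_by_subdomain(board))
```

Each key in the resulting mapping is a candidate service boundary to review with the stakeholders.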

Within each domain, you can then work on making the data independent as well, using patterns such as Strangler Fig or an inter-process communication (IPC) adapter.

Extract a service from a monolith

After you identify the ideal service candidate, you must identify a way for both microservice and monolithic modules to coexist. One way to manage this coexistence is to introduce an inter-process communication (IPC) adapter, which can help the modules work together. Over time, the microservice takes on the load and eliminates the monolithic component. This incremental process reduces the risk of moving from the monolithic application to the new microservice because you can detect bugs or performance issues in a gradual fashion.

The following diagram shows how to implement the IPC approach:

Figure 2. An IPC adapter coordinates communication between the monolithic application and a microservices module.

In figure 2, module Z is the service candidate that you want to extract from the monolithic application. Modules X and Y are dependent upon module Z. Once module Z runs as a microservice, modules X and Y use an IPC adapter in the monolithic application to communicate with it through a REST API.
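The IPC adapter idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation from the referenced guide: the `price_for` operation, the `/prices/{sku}` endpoint, and all class names are hypothetical. The point is that the calling module keeps using the same interface while the adapter forwards the call over REST.

```python
import json
import urllib.request
from typing import Callable, List, Optional, Protocol


class ModuleZ(Protocol):
    """Interface that modules X and Y already depend on (hypothetical)."""

    def price_for(self, sku: str) -> float: ...


class InProcessModuleZ:
    """The legacy implementation that still lives inside the monolith."""

    def price_for(self, sku: str) -> float:
        return {"A-1": 9.99}.get(sku, 0.0)


class RestModuleZAdapter:
    """IPC adapter: same interface, but the call goes to the microservice over REST."""

    def __init__(self, base_url: str, fetch: Optional[Callable[[str], bytes]] = None):
        self.base_url = base_url.rstrip("/")
        # fetch is injectable so the adapter can be exercised without a network.
        self._fetch = fetch or self._http_get

    @staticmethod
    def _http_get(url: str) -> bytes:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.read()

    def price_for(self, sku: str) -> float:
        # Assumed endpoint shape: GET <base_url>/prices/<sku> -> {"price": <number>}
        body = self._fetch(f"{self.base_url}/prices/{sku}")
        return float(json.loads(body)["price"])


def module_x_total(z: ModuleZ, skus: List[str]) -> float:
    """Module X stays in the monolith and only knows the ModuleZ interface."""
    return round(sum(z.price_for(sku) for sku in skus), 2)
```

Because `module_x_total` accepts anything that satisfies `ModuleZ`, switching from `InProcessModuleZ` to `RestModuleZAdapter` is a wiring change rather than a rewrite of module X, which is what lets the two implementations coexist during the migration.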

The next document in the Google Cloud series, Interservice communication in a microservices setup, describes the Strangler Fig pattern and how to deconstruct a service from the monolith.
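As a rough idea of what Strangler Fig looks like in code, the sketch below puts a facade in front of the monolith and moves one route prefix at a time to a new service. It is an assumption-laden illustration: `StranglerFacade`, the handler signature, and the paths are hypothetical, and a real deployment would usually do this routing in an API gateway or reverse proxy.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


class StranglerFacade:
    """Sends each request to the monolith unless its path prefix has been migrated."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, prefix: str, service: Handler) -> None:
        """Register a microservice as the new owner of everything under prefix."""
        self._migrated[prefix] = service

    def handle(self, path: str) -> str:
        # Longest matching prefix wins, so a more specific route takes precedence.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        return self._legacy(path)


facade = StranglerFacade(lambda path: f"monolith:{path}")
facade.migrate("/orders", lambda path: f"orders-service:{path}")
print(facade.handle("/orders/1"))   # served by the new service
print(facade.handle("/catalog/9"))  # still served by the monolith
```

Callers only ever talk to the facade, so each migration step is invisible to them and can be rolled back by removing the prefix.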

Learn more about these patterns here – https://cloud.google.com/architecture/microservices-architecture-refactoring-monoliths

Conclusion

When integrated with the TOGAF® framework, Microservices Architecture (MSA) provides a strong and adaptable method for handling intricate, distributed architectures. Implementing MSA enables businesses to boost their agility, scalability, and resilience, thereby increasing their capacity to adjust to shifting market trends.

During the Architecture Vision phase (Phase A of the ADM), establish your fundamental principles.

Identify key business areas to concentrate on, such as E-Commerce or online services.

Utilize domain-driven design (code patterns) and event storming (practical approach) to delineate domains and subdomains, using this framework as a business reference model to establish the groundwork of your software architecture.

Develop migration strategies, such as IPC adapters or the Strangler Fig pattern, for database decoupling.

In the Technology Architecture phase (Phase D) of the ADM, plan for container orchestration tools such as Kubernetes.

Subsequently, pass the project to Solution Architects to address the remaining non-functional requirements, from observability to security, during Phase F (Migration Planning) of the ADM. This enables them to define distinct work packages adhering to SMART principles.

TIP: When migrating databases, the legacy database will sometimes remain the main data store, with the microservice database acting as a secondary until the migration is complete. Do not underestimate tools like CDC to assist with this.

Change Data Capture (CDC) is an approach for tracking changes made to the data in a database. It lets microservices be notified of any modifications so that they can update their own data accordingly. Because changes are delivered as they happen, this saves the time that would otherwise be spent on regular database scans.
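To make the idea concrete, here is a small, self-contained sketch using two in-memory SQLite databases. It uses trigger-based capture, which is a simplification I have swapped in for illustration: dedicated CDC tools typically read the database's transaction log instead. The table names, the `customer_changes` log, and the `sync` function are all hypothetical.

```python
import sqlite3

# Legacy database: still the main data store during the migration.
legacy = sqlite3.connect(":memory:")
legacy.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL);
    -- Change log filled by triggers; seq gives a total order of changes.
    CREATE TABLE customer_changes (
        seq INTEGER PRIMARY KEY AUTOINCREMENT,
        op TEXT NOT NULL,
        id INTEGER NOT NULL,
        email TEXT
    );
    CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
        INSERT INTO customer_changes (op, id, email) VALUES ('upsert', NEW.id, NEW.email);
    END;
    CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
        INSERT INTO customer_changes (op, id, email) VALUES ('upsert', NEW.id, NEW.email);
    END;
    CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
        INSERT INTO customer_changes (op, id, email) VALUES ('delete', OLD.id, NULL);
    END;
    """
)

# Microservice database: the secondary copy until the migration completes.
service = sqlite3.connect(":memory:")
service.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")


def sync(last_seq: int) -> int:
    """Apply changes newer than last_seq to the service DB; return the new offset."""
    rows = legacy.execute(
        "SELECT seq, op, id, email FROM customer_changes WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    for seq, op, row_id, email in rows:
        if op == "delete":
            service.execute("DELETE FROM customers WHERE id = ?", (row_id,))
        else:
            service.execute(
                "INSERT OR REPLACE INTO customers (id, email) VALUES (?, ?)",
                (row_id, email),
            )
        last_seq = seq
    service.commit()
    return last_seq
```

The consumer stores the returned offset and passes it to the next `sync` call, so each run reads only the new changes rather than rescanning the `customers` table.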

References

https://www.lucidchart.com/blog/ddd-event-storming

https://cloud.google.com/architecture/microservices-architecture-refactoring-monoliths

https://learn.microsoft.com/en-us/azure/architecture/patterns/strangler-fig
